TECHNOLOGY R&D PROJECTS

Three technology research & development projects

Focused on developing essential resources for realistically modeling patients, imaging systems, and virtual readers.

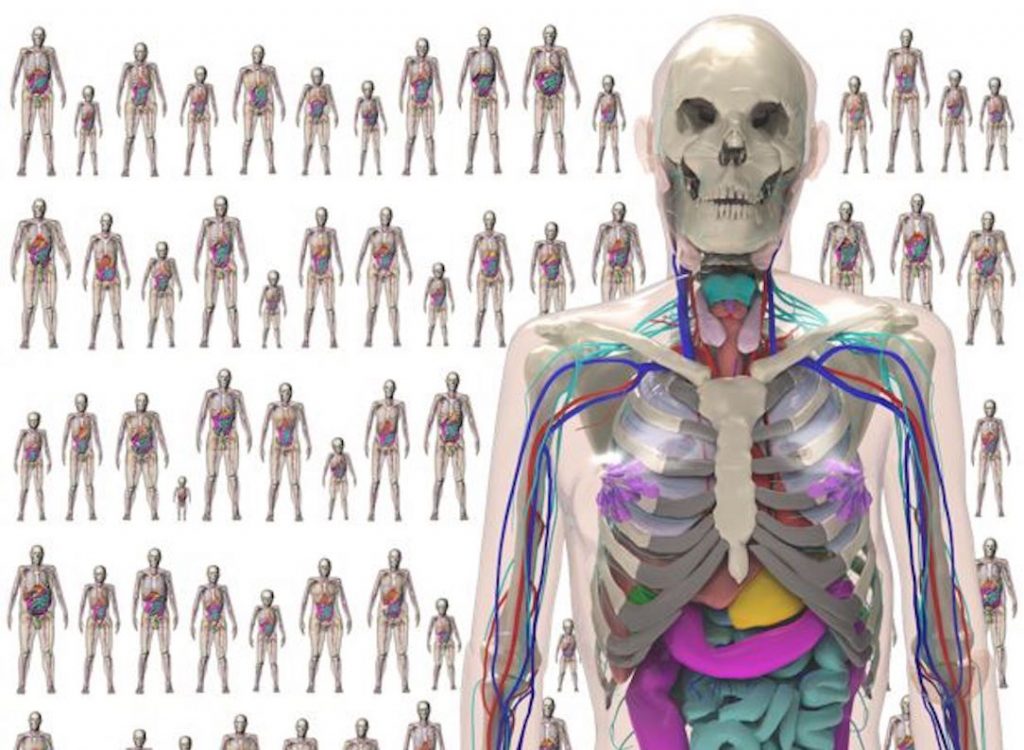

TR&D 1 - Virtual Patients

We are developing a framework from which researchers can generate vast populations of realistic, customizable virtual subjects for 3D and 4D research, significantly advancing human modeling to enable virtual imaging trials.

Principal Investigator: Paul Segars

Virtual imaging trials require a virtual patient population, which is provided by computational phantoms that model patient anatomy and physiology. Imaging data of a computational phantom can be generated using virtual scanners, that is, computerized models of the imaging process such as those developed in TR&D 2. Through this combination, experimental data can be generated entirely on the computer. The advantage of such studies is that, unlike with actual patients, the exact anatomy of the phantom is known, providing a “gold standard” or “ground truth” against which to quantitatively evaluate and improve imaging devices and techniques.

For virtual imaging trials, it is essential to have computational phantoms that are realistic; otherwise, the results will not be indicative of what would occur in live subjects. Phantoms must realistically model patient anatomy, including any factors that could affect medical imaging. Computational phantoms must also model the variability of a clinical population, since anatomy differs from person to person. With the move toward personalized medicine, new imaging devices and techniques should be optimized for both the individual patient and the population. A library of phantoms modeling a range of anatomies will provide an important tool for investigating personalized techniques, moving away from a one-size-fits-all approach.

Using state-of-the-art techniques, we are developing an extensive series of realistic computational phantoms to represent the population at large. The result will be the first comprehensive library of computational phantoms that accurately captures the anatomical and physiological variability of individuals across different factors. The tools will be packaged into a framework from which researchers will be able to generate virtual populations on demand with user-defined anatomical and physiological characteristics. Combined with the resources developed in TR&D 2 and TR&D 3, the virtual subjects will enable simulation-based evaluation and optimization across a wide variety of 3D and 4D imaging applications, with an initial focus on CT.

The phantoms we develop (known as the XCAT phantoms) have already gained wide use in imaging research. Currently, over 400 academic groups around the world, as well as several research labs within commercial imaging companies (GE Healthcare, Siemens Healthineers, Philips, Samsung, and Microsoft), have requested and gained access to the phantoms. As the phantoms are enhanced with new capabilities throughout this work, they will continue to find application in many exciting areas of medical imaging.

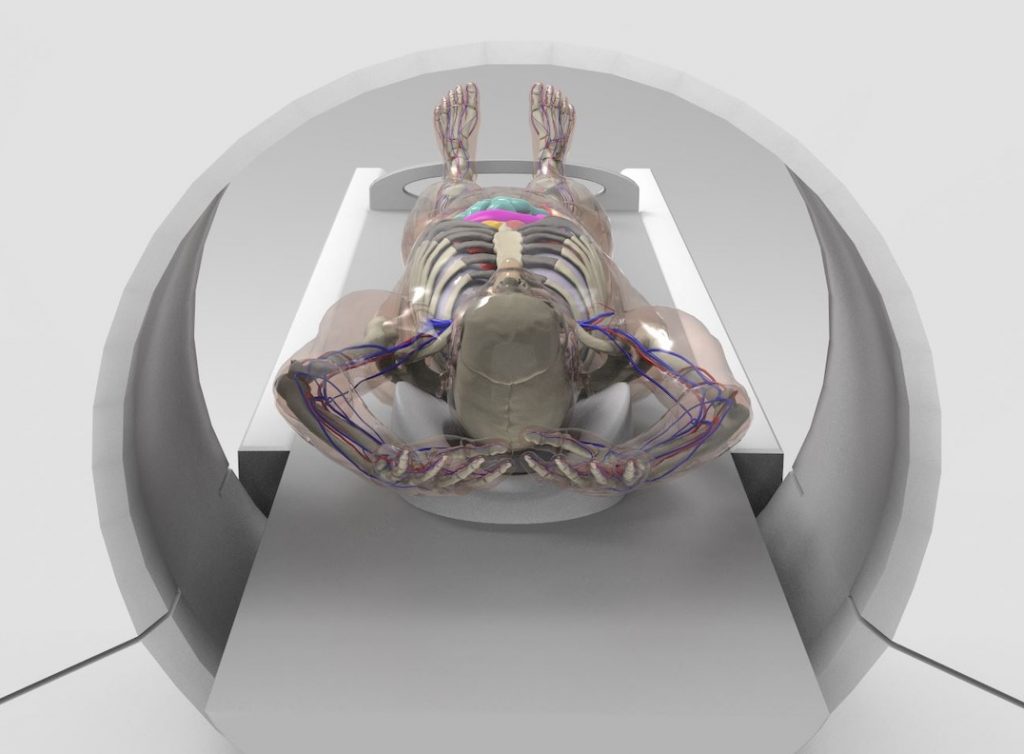

TR&D 2 - Virtual Scanners

We are developing a platform for rapid, accurate, and realistic CT simulations capable of generating 3D and 4D CT images and radiation dose estimates for highly detailed patient-specific anatomies with physiological and perfusion dynamics.

Principal Investigators: Ehsan Samei, Ehsan Abadi

Virtual imaging trials are of great value in CT, where there is a substantial need to assess and optimize a multitude of hardware and software choices, parameters, and protocols across clinical tasks and applications. For this purpose, it is essential to have a simulator that realistically models the acquisition geometry and physics of CT scanners.

We are developing a modular simulation platform for CT that builds on our expertise and prior experience in developing simulation tools for x-ray-based imaging modalities. To achieve the realism and scanner specificity required for conducting virtual imaging trials in a time-efficient manner, the platform combines the high spatio-temporal performance of ray tracing, precise scatter and radiation dose estimates from Monte Carlo, parallelized computation on GPUs, and precise modeling of CT geometry and components.

The platform will model specific CT systems, with the additional flexibility to accommodate user-defined and generic (i.e., manufacturer-neutral) specifications across a variety of user-specified protocols, including their respective reconstruction algorithms and features. Combined with the resources developed in TR&D 1 and TR&D 3, and informed by our Collaborative Projects, the simulator will provide realistic, large-scale virtual datasets that enable evaluation and optimization across a wide variety of CT technologies.

We have recently developed a state-of-the-art hybrid CT simulator (DukeSim) that simulates sinograms and absorbed radiation dose using a hybrid model: ray tracing estimates the primary component of the signal, and Monte Carlo estimates the scatter component and the radiation dose. Combined with the computational phantoms developed in TR&D 1, DukeSim has enabled various imaging studies evaluating and optimizing a wide variety of CT technologies. TR&D 2 will expand DukeSim to model various clinical and emerging scanners, with detailed modeling of their specific components and features as well as their processing methods. The upgraded DukeSim will be verified and validated against experimental measurements from a broad range of CT systems.
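The split between a deterministic primary signal and a separately estimated scatter signal can be illustrated with a toy example. The sketch below is not DukeSim code and makes strong simplifying assumptions: a single monoenergetic, parallel-beam view of a 2D attenuation map, primary fluence computed with the Beer-Lambert law along straight rays, and, in place of a Monte Carlo scatter estimate, a simple Gaussian convolution kernel scaled by a hypothetical scatter-to-primary ratio. All function names and parameter values are illustrative assumptions, and no dose is computed.

```python
import numpy as np

def primary_projection(mu, voxel_size_cm, i0=1.0e5):
    """Primary (unscattered) signal for one parallel-beam view.

    mu: 2D array of linear attenuation coefficients in 1/cm. Rays travel
    along axis 0, so each column maps to one detector channel.
    """
    line_integrals = mu.sum(axis=0) * voxel_size_cm  # discrete integral of mu along each ray
    return i0 * np.exp(-line_integrals)              # Beer-Lambert law

def approximate_scatter(primary, scatter_to_primary=0.1, kernel_sigma_px=5.0):
    """Stand-in for a Monte Carlo scatter estimate (this is NOT Monte Carlo).

    Blurs the primary with a broad, unit-area Gaussian kernel; the hypothetical
    scatter-to-primary ratio sets the overall scatter magnitude.
    """
    half_width = int(3 * kernel_sigma_px)
    x = np.arange(-half_width, half_width + 1)
    kernel = np.exp(-0.5 * (x / kernel_sigma_px) ** 2)
    kernel /= kernel.sum()
    # Full convolution, then keep the centered slice so the output always has
    # one value per detector channel, even if the kernel is longer than the detector.
    full = np.convolve(primary, kernel, mode="full")
    return scatter_to_primary * full[half_width:half_width + len(primary)]

def hybrid_projection(mu, voxel_size_cm, i0=1.0e5, scatter_to_primary=0.1):
    """Total detected signal = ray-traced primary + separately estimated scatter."""
    primary = primary_projection(mu, voxel_size_cm, i0)
    scatter = approximate_scatter(primary, scatter_to_primary)
    return primary + scatter, primary, scatter

if __name__ == "__main__":
    # Hypothetical 20 cm x 20 cm water-like slice (mu ~ 0.2 /cm) with a denser insert.
    mu = np.full((100, 100), 0.2)
    mu[40:60, 40:60] = 0.4
    total, primary, scatter = hybrid_projection(mu, voxel_size_cm=0.2)
    print(primary[[0, 50]], scatter[[0, 50]])
```

A full sinogram would repeat this for every gantry angle (for example, by rotating the attenuation map before each projection). The appeal of the hybrid design described above is that the primary term is cheap and exact along each ray, while the costly stochastic simulation is reserved for scatter and dose.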

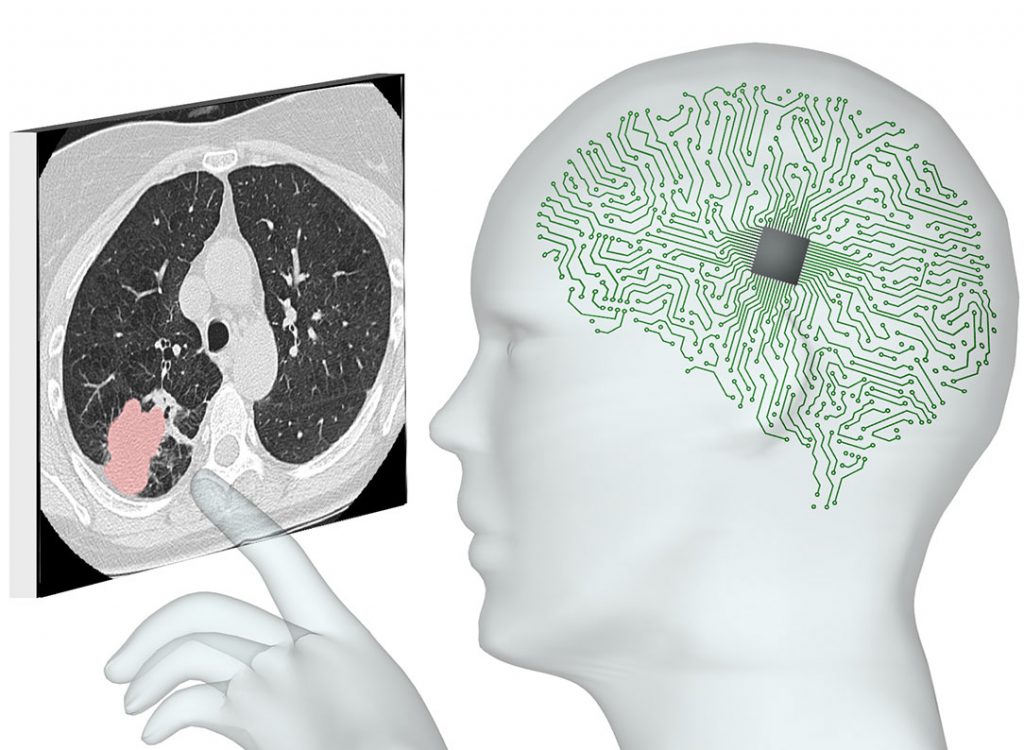

TR&D 3 - Virtual Readers

Virtual imaging trials culminate in the analysis of medical images by virtual readers. We are seeking ways to emulate and extend the clinical reading of images for specific tasks such as lesion detection, classification, or measurement.

Principal Investigators: Joseph Lo, Cynthia D. Rudin

In the clinic, medical images are interpreted by radiologists to detect or diagnose disease. We are creating the computational equivalent in the form of virtual readers that span three categories: observer models, radiomics, and deep learning. These virtual readers can analyze the vast amounts of data in imaging trials, be they clinical or simulated. Our goal is to develop easy-to-use tools that generalize to a wide range of medical imaging needs, such as radiation dose reduction, radiologist education, and clinical adoption of artificial intelligence (AI).
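To make the radiomics category concrete, the sketch below computes a few common first-order radiomics features (mean, standard deviation, skewness, and histogram entropy) from the voxels inside a lesion mask. It is an illustrative assumption, not the Center's feature pipeline: the feature subset, the function name `first_order_features`, and the 32-bin histogram are hypothetical choices, and standardized definitions (such as those of the Image Biomarker Standardisation Initiative) differ in details like binning.

```python
import numpy as np

def first_order_features(image, mask, n_bins=32):
    """Hypothetical subset of first-order radiomics features within a mask."""
    values = image[mask.astype(bool)].astype(float)
    mean = values.mean()
    std = values.std()
    # Third standardized moment; defined as 0 for a constant region.
    skewness = np.mean((values - mean) ** 3) / std**3 if std > 0 else 0.0
    # Shannon entropy (bits) of the intensity histogram, ignoring empty bins.
    counts, _ = np.histogram(values, bins=n_bins)
    p = counts[counts > 0] / counts.sum()
    entropy = float(-np.sum(p * np.log2(p)))
    return {"mean": mean, "std": std, "skewness": skewness, "entropy": entropy}

if __name__ == "__main__":
    roi = np.arange(16.0).reshape(4, 4)        # toy "lesion" intensities
    mask = np.ones_like(roi, dtype=bool)       # every voxel belongs to the lesion
    print(first_order_features(roi, mask))
```

Because a virtual trial can image the same phantom lesion under many acquisition and reconstruction settings, features like these can be compared across conditions to judge how robust they are.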

Our virtual readers benefit from the key advantage of our Center: it creates medical images with controllable ground truth for both normal anatomy and disease. The project builds on our prior research in all three areas. As part of this work, we have quantified the robustness of CT radiomics features across a diverse set of CT imaging conditions. For low-contrast CT lesions, we have correlated human detection performance with that of a wide range of observer models. We have also created a dataset of over 30,000 chest CTs and developed classification models for over 80 abnormalities.
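As an example of the observer-model category, the sketch below implements a non-prewhitening (NPW) matched-filter observer, one classic model for lesion detection tasks; this text does not specify which observer models were used, so the choice of NPW, the function name `npw_dprime`, and the synthetic white-noise images are assumptions for illustration. Because the lesion is known exactly in a virtual trial, the template here is the true signal profile, and the detectability index is d' = (mean(t_present) − mean(t_absent)) / sqrt((var(t_present) + var(t_absent)) / 2), where t is the template applied to each image.

```python
import numpy as np

def npw_dprime(signal_present, signal_absent, signal):
    """Detectability index d' of a non-prewhitening matched-filter observer.

    signal_present, signal_absent: arrays of shape (n_images, h, w) holding ROIs
    at the lesion location with and without the lesion.
    signal: (h, w) known lesion profile, used directly as the observer template.
    """
    sp = signal_present.reshape(len(signal_present), -1).astype(float)
    sa = signal_absent.reshape(len(signal_absent), -1).astype(float)
    template = np.asarray(signal, dtype=float).ravel()
    t_sp = sp @ template   # observer test statistic per signal-present image
    t_sa = sa @ template   # observer test statistic per signal-absent image
    pooled_var = 0.5 * (t_sp.var(ddof=1) + t_sa.var(ddof=1))
    return (t_sp.mean() - t_sa.mean()) / np.sqrt(pooled_var)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    lesion = np.zeros((8, 8))
    lesion[3:6, 3:6] = 0.5                      # hypothetical low-contrast lesion
    absent = rng.normal(0.0, 1.0, size=(2000, 8, 8))
    present = rng.normal(0.0, 1.0, size=(2000, 8, 8)) + lesion
    # For white noise with unit variance, theory predicts d' = ||lesion|| = 1.5.
    print(npw_dprime(present, absent, lesion))
```

Comparing d' values like this one with human detection performance across lesion contrasts and dose levels is one way an observer model can be checked as a stand-in for human readers.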